Tailwind 2.x recommends using PostCSS as your preprocessor. However, if you still want to use Sass, the documentation isn’t very clear on how to set it up.

There is a page on it, but it doesn’t give you the step-by-step breakdown you need, so here I document what’s needed to use Sass with Tailwind 2.x and Laravel Mix.

First, rename the `resources/css/app.css` file to use the `.scss` extension:

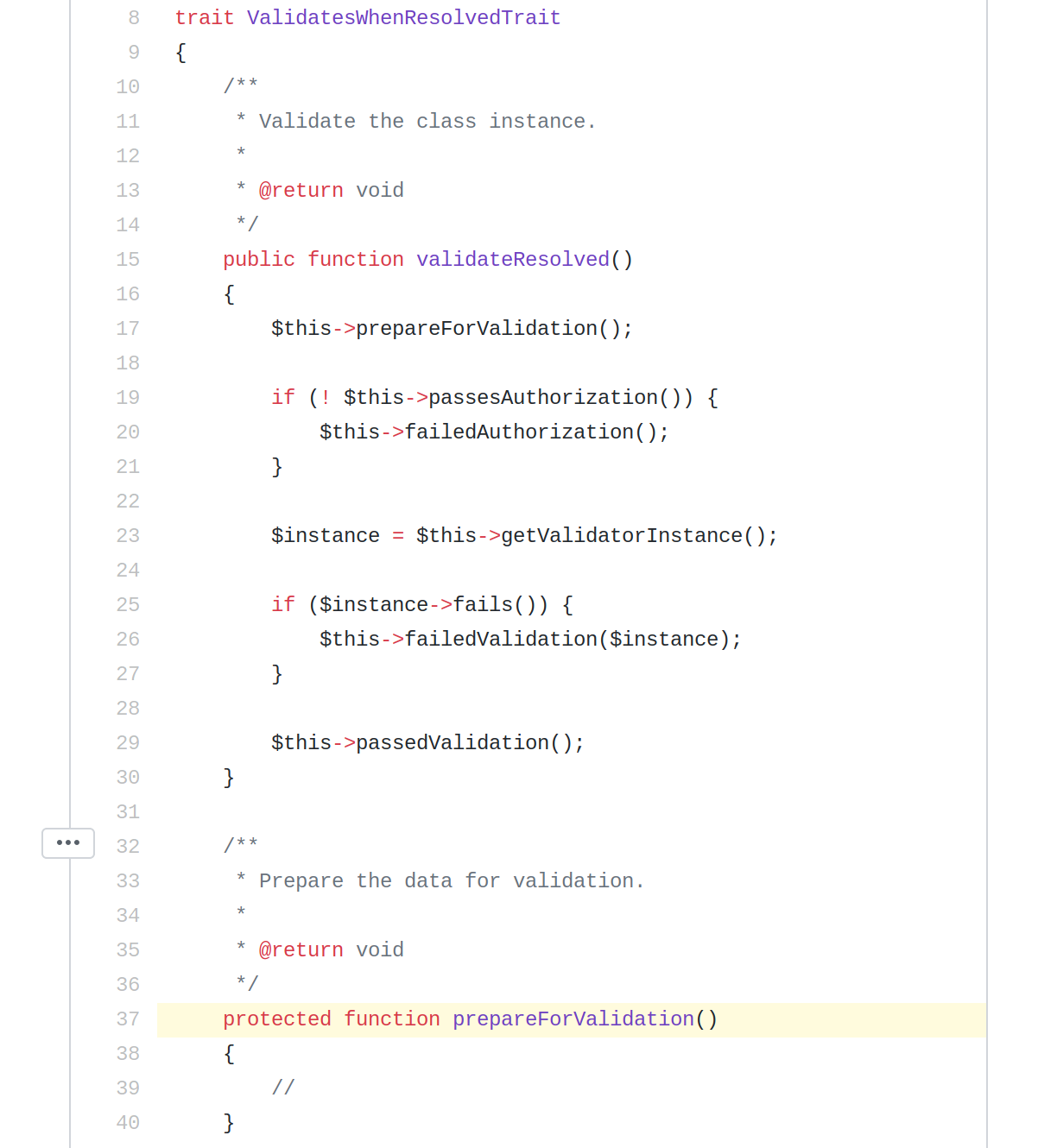

```shell
mv resources/css/app.css resources/css/app.scss
```

Next, remove the default `postCss` config from the `webpack.mix.js` config file:

```js
// snip...

mix.postCss('resources/css/app.css', 'public/css', [
    require('postcss-import'),
    require('tailwindcss'),
    require('autoprefixer')
])
```

Now require the `tailwindcss` module, switch to `mix.sass()`, and configure PostCSS to use the `tailwind.config.js` file:

```js
const tailwindcss = require('tailwindcss')

// snip...

mix.sass('resources/css/app.scss', 'public/css')
    .options({ postCss: [tailwindcss('./tailwind.config.js')] })
```

That’s it!
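For reference, here is a sketch of how the whole `webpack.mix.js` might look once both changes are made. It assumes the rest of the file matches a default Laravel setup; the `mix.js('resources/js/app.js', 'public/js')` line is an assumption, so keep whatever JavaScript entry your file already has.

```javascript
// webpack.mix.js: sketch of the full file after the changes above
const mix = require('laravel-mix');
const tailwindcss = require('tailwindcss');

// Compile JavaScript (assumed default Laravel entry point; keep your own)
mix.js('resources/js/app.js', 'public/js')
    // Compile Sass instead of plain CSS
    .sass('resources/css/app.scss', 'public/css')
    // Run the compiled CSS through PostCSS with Tailwind and your config
    .options({
        postCss: [tailwindcss('./tailwind.config.js')],
    });
```

Chaining `.sass()` onto `.js()` is equivalent to calling `mix.sass(...)` on its own line, as in the snippet above.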

When you run `npm run dev`, Laravel Mix will detect that the required Sass and other JavaScript dependencies are missing and install them automatically.
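If you want to see what is still missing before running the build, a small Node script can check whether packages resolve from your project. This is a hypothetical helper, not part of Laravel Mix, and it assumes the Sass toolchain packages are named `sass` and `sass-loader`, which is what Mix typically pulls in for `mix.sass()`. The exact list may differ between Mix versions.

```javascript
// check-sass-deps.js: hypothetical helper, not part of Laravel Mix.
// Run from the project root with: node check-sass-deps.js

// Return the package names that Node cannot resolve from this
// script's location (e.g. because they are not in node_modules).
function missingPackages(names) {
  return names.filter((name) => {
    try {
      require.resolve(name);
      return false;
    } catch (err) {
      return true;
    }
  });
}

// Assumed package names for the Sass toolchain used by mix.sass()
const missing = missingPackages(['sass', 'sass-loader']);

if (missing.length > 0) {
  console.log(`Not installed yet: ${missing.join(', ')}`);
} else {
  console.log('Sass dependencies are already installed.');
}
```

You could install anything it lists with `npm install --save-dev sass sass-loader`, but Mix may expect a specific `sass-loader` version, so letting `npm run dev` install them is the simpler route.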